| Age | Commit message (Collapse) | Author | Files | Lines |

|---|

|

This PR adds lowering of `gpu.dynamic_shared_memory` to rocdl target.

|

|

Fixes a crash in `TransposeOp::inferReturnTypeComponents()` when the

supplied permutation tensor is rank-0.

Also removes some dead code from the type inference function.

Fix https://github.com/llvm/llvm-project/issues/74237

|

|

|

|

Continuation of https://github.com/llvm/llvm-project/pull/74247 to fix

https://github.com/llvm/llvm-project/issues/56962. Fixes verifier for

(Integer Attr):

```mlir

llvm.mlir.constant(1 : index) : f32

```

and (Dense Attr):

```mlir

llvm.mlir.constant(dense<100.0> : vector<1xf64>) : f32

```

## Integer Attr

The addition that this PR makes to `LLVM::ConstantOp::verify` is meant

to be exactly verifying the code in

`mlir::LLVM::detail::getLLVMConstant`:

https://github.com/llvm/llvm-project/blob/9f78edbd20ed922cced9482f7791deb9899a6d82/mlir/lib/Target/LLVMIR/ModuleTranslation.cpp#L350-L353

One failure mode is when the `type` (`llvm.mlir.constant(<value>) :

<type>`) is not an `Integer`, because then the `cast` in

`getIntegerBitWidth` will crash:

https://github.com/llvm/llvm-project/blob/dca432cb7b1c282f5dc861095813c4f40f109619/llvm/include/llvm/IR/DerivedTypes.h#L97-L99

So that's now caught in the verifier.

Apart from that, I don't see anything we could check for. `sextOrTrunc`

means "Sign extend or truncate to width" and that one is quite

permissive. For example, the following doesn't have to be caught in the

verifier as it doesn't crash during `mlir-translate -mlir-to-llvmir`:

```mlir

llvm.func @main() -> f32 {

%cst = llvm.mlir.constant(100 : i64) : f32

llvm.return %cst : f32

}

```

## Dense Attr

Crash if not either a MLIR Vector type or one of these:

https://github.com/llvm/llvm-project/blob/9f78edbd20ed922cced9482f7791deb9899a6d82/mlir/lib/Target/LLVMIR/ModuleTranslation.cpp#L375-L391

|

|

This patch adds a target_features (TargetFeaturesAttr) to the LLVM

dialect to allow setting and querying the features in use on a function.

The motivation for this comes from the Arm SME dialect where we would

like a convenient way to check what variants of an operation are

available based on the CPU features.

Intended usage:

The target_features attribute is populated manually or by a pass:

```mlir

func.func @example() attributes {

target_features = #llvm.target_features<["+sme", "+sve", "+sme-f64f64"]>

} {

// ...

}

```

Then within a later rewrite the attribute can be checked, and used to

make lowering decisions.

```c++

// Finds the "target_features" attribute on the parent

// FunctionOpInterface.

auto targetFeatures = LLVM::TargetFeaturesAttr::featuresAt(op);

// Check a feature.

// Returns false if targetFeatures is null or the feature is not in

// the list.

if (!targetFeatures.contains("+sme-f64f64"))

return failure();

```

For now, this is rather simple just checks if the exact feature is in

the list, though it could be possible to extend with implied features

using information from LLVM.

|

|

Add support for frame pointers in MLIR.

---------

Co-authored-by: Markus Böck <markus.boeck02@gmail.com>

Co-authored-by: Christian Ulmann <christianulmann@gmail.com>

|

|

Translate debug emission kind into LLVM (the importer already supports

this).

|

|

Updates patterns for flattening `vector.transfer_read` by relaxing the

requirement that the "collapsed" indices are all zero. This enables

collapsing cases like this one:

```mlir

%2 = vector.transfer_read %arg4[%c0, %arg0, %arg1, %c0] ... :

memref<1x43x4x6xi32>, vector<1x2x6xi32>

```

Previously only the following case would be consider for collapsing

(all indices are 0):

```mlir

%2 = vector.transfer_read %arg4[%c0, %c0, %c0, %c0] ... :

memref<1x43x4x6xi32>, vector<1x2x6xi32>

```

Also adds some new comments and renames the `firstContiguousInnerDim`

parameter as `firstDimToCollapse` (the latter better matches the actual

meaning).

Similar updates for `vector.transfer_write` will be implemented in a

follow-up patch.

|

|

This PR creates the wrapper class AffineForOp and adds a testcase for

it. A testcase for the AffineLoadOp is also added.

|

|

When two `complex.bitcast` ops are folded and the resulting bitcast is a

non-complex -> non-complex bitcast, an `arith.bitcast` should be

generated. Otherwise, the generated `complex.bitcast` op is invalid.

Also remove a pattern that convertes non-complex -> non-complex

`complex.bitcast` ops to `arith.bitcast`. Such `complex.bitcast` ops are

invalid and should not appear in the input.

Note: This bug can only be triggered by running with `-debug` (which

will should intermediate IR that does not verify) or with

`MLIR_ENABLE_EXPENSIVE_PATTERN_API_CHECKS` (#74270).

|

|

Add:

* an Op for 'cp.async.mbarrier.arrive', targeting the

nvvm_cp_async_mbarrier_arrive* family of intrinsics.

* The 'noinc' intrinsic property is modelled as a default-valued-attr of

type I1.

* Test cases are added to verify the Op as well as the intrinsic

lowering.

Signed-off-by: Durgadoss R <durgadossr@nvidia.com>

|

|

This PR introduce `nvvm.fence.proxy` OP for the following cases:

```

nvvm.fence.proxy { kind = #nvvm.proxy_kind<alias>}

nvvm.fence.proxy { kind = #nvvm.proxy_kind<async>}

nvvm.fence.proxy { kind = #nvvm.proxy_kind<async.global>}

nvvm.fence.proxy { kind = #nvvm.proxy_kind<async.shared>, space = #nvvm.shared_space<cta>}

nvvm.fence.proxy { kind = #nvvm.proxy_kind<async.shared>, space = #nvvm.shared_space<cluster>}

```

|

|

(#74075)

GPU dialect has `#gpu.address_space<workgroup>` for shared memory of

NVGPU (address space =3). Howeverm when IR combine NVGPU and GPU

dialect, `nvgpu-to-nvvm` pass fails due to missing attribute conversion.

This PR adds `populateGpuMemorySpaceAttributeConversions` to

nvgou-to-nvvm lowering, so we can use `#gpu.address_space<workgroup>`

`nvgpu-to-nvvm` pass

|

|

This PR introduces DIGlobalVariableAttr and

DIGlobalVariableExpressionAttr so that ModuleTranslation can emit the

required metadata needed for debug information about global variable.

The translator implementation for debug metadata needed to be refactored

in order to allow translation of nodes based on MDNode

(DIGlobalVariableExpressionAttr and DIExpression) in addition to

DINode-based nodes.

A DIGlobalVariableExpressionAttr can now be passed to the GlobalOp

operation directly and ModuleTranslation will create the respective

DIGlobalVariable and DIGlobalVariableExpression nodes. The compile unit

that DIGlobalVariable is expected to be configured with will be updated

with the created DIGlobalVariableExpression.

|

|

Updates "flatten vector" patterns to support more cases, namely Ops that

read/write vectors with leading unit dims. For example:

```mlir

%0 = vector.transfer_read %arg0[%c0, %c0, %c0, %c0] ... :

memref<5x4x3x2xi8, strided<[24, 6, 2, 1], offset: ?>>, vector<1x1x2x2xi8>

```

Currently, the `vector.transfer_read` above would not be flattened. With

this

change, it will be rewritten as follows:

```mlir

%collapse_shape = memref.collapse_shape %arg0 [[0, 1, 2, 3]] :

memref<5x4x3x2xi8, strided<[24, 6, 2, 1], offset: ?>>

into memref<120xi8, strided<[1], offset: ?>>

%0 = vector.transfer_read %collapse_shape[%c0] ... :

memref<120xi8, strided<[1], offset: ?>>, vector<4xi8>

%1 = vector.shape_cast %0 : vector<4xi8> to vector<1x1x2x2xi8>

```

`hasMatchingInnerContigousShape` is generalised and renamed as

`isContiguousSlice` to better match the updated functionality. A few

test names are updated to better highlight what case is being exercised.

|

|

This moves the SME tile vector.print lowering from

`-convert-arm-sme-to-scf` to `-convert-vector-to-arm-sme`. This seems

like a more logical place, as this is lowering a vector op to ArmSME,

and it also prevents vector.print from blocking tile allocation.

|

|

Fixes https://github.com/llvm/llvm-project/issues/73382.

This PR suggests to replace two assertions that were introduced in

https://github.com/llvm/llvm-project/commit/adabce41185910227ca276a1cfd22e76443dd238

(https://reviews.llvm.org/D135748). According to the enum definition of

`NotSpeculatable`, an op that invokes undefined behavior is

`NotSpeculatable`.

https://github.com/llvm/llvm-project/blob/0c06e8745f131d867c566f4d35a7a04e24b4a075/mlir/include/mlir/Interfaces/SideEffectInterfaces.h#L248-L258

and both `tensor.dim` and `memref.dim` state that "If the dimension

index is out of bounds, the behavior is undefined."

So therefore it seems to me that `DimOp::getSpeculatability()` should

return `NotSpeculatable` if the dimension index is out of bounds.

The added test is just a simplified version of

https://github.com/llvm/llvm-project/issues/73382.

|

|

Fixes one of the cases of

https://github.com/llvm/llvm-project/issues/56962.

This PR basically moves some code from

`mlir::LLVM::detail::getLLVMConstant`

([source](https://github.com/llvm/llvm-project/blob/9f78edbd20ed922cced9482f7791deb9899a6d82/mlir/lib/Target/LLVMIR/ModuleTranslation.cpp#L354-L371))

over to the verifier of `LLVM::ConstantOp`. For now, I focused just on

the case where the attribute is a float and ignored the integer case of

https://github.com/llvm/llvm-project/issues/56962. Note that without

this patch, both added tests will crash inside `getLLVMConstant` during

`mlir-translate -mlir-to-llvmir`.

|

|

The existing lowering of tosa.conv2d emits a separate linalg.generic

operator to add the bias after computing the computation.

This change eliminates that additional step by using the generated

linalg.conv_2d_* operator by using the bias value as the input to the

linalg.conv_2d operation.

Rather than:

%init = tensor.empty()

%conv = linalg.conv_2d ins(%A, %B) %outs(%init)

%init = tensor.empty()

%bias = linalg.generic ins(%conv, %bias) outs(%init2) {

// perform add operation

}

The lowering now produces:

%init = tensor.empty()

%bias_expanded = linalg.broadcast ins(%bias) outs(%init)

%conv = linalg.conv_2d ins(%A, %B) %outs(%bias)

This is the same strategy as

https://github.com/llvm/llvm-project/pull/73049 applied to convolutions.

|

|

-Fix case where an op is scheduled in stage 0 and used with a distance

of 1

-Fix case where we don't peel the epilogue and a value not part of the

last stage is used outside the loop.

|

|

|

|

Without folding the result of the initial tensor.dim, the

ReifyResultShapes implementation would be incorrect because it would

return a dynamic shape for a static result shape.

|

|

|

|

The `conv_2d_ngchw_fgchw` Op implements 2d grouped convolution with

dimensions ordered as given in the name. However, the current

implementation orders weights as `gfchw` instead of `fgchw`. This was

already pointed out in an old phabricator revision which never landed:

https://reviews.llvm.org/D150064

This patch

1) Adds a new op `conv_2d_ngchw_gfchw`

2) Fixes the dimension ordering of the old op `conv_2d_ngchw_fgchw`

3) Adds tests with non-dynamic dimensions so that it's easier to

understand.

|

|

This adds an operation for concatenating ranked tensors along a static

dimension, as well as a decomposition mirroring the existing lowering

from TOSA to Tensor. This offers a convergence point for "input" like

dialects that include various lowerings for concatenation operations,

easing later analysis. In the future, this op can implement the

necessary interfaces for tiling, as well as potentially add conversions

to some kind of linalg and/or memref counterpart.

This patch adds the op, the decomposition, and some basic

folding/canonicalization. Replacing lowerings with the op (such as the

TOSA lowering) will come as a follow up.

See

https://discourse.llvm.org/t/rfc-tensor-add-a-tensor-concatenate-operation/74858

|

|

If we can infer statically that there are no incomplete tiles, we can

remove the optional padding operand.

Fixes https://github.com/openxla/iree/issues/15417

|

|

At the moment the logic to tile and vectorize `linalg.matmul` is

duplicated in multiple test files:

* matmul.mlir

* matmul_mixed_ty.mlir

Instead, this patch uses `transform.foreach` to apply the same sequence

to multiple functions within the same test file (e.g. `matmul_f32` and

`matmul_mixed_ty` as defined in the original files). This allows us to

merge relevant test files.

|

|

The lowering of tosa.conv2d produces an illegal tensor.empty operation

where the number of inputs do not match the number of dynamic dimensions

in the output type.

The fix is to base the generation of tensor.dim operations off the

result type of the conv2d operation, rather than the input type. The

problem and fix are very similar to this fix

https://github.com/llvm/llvm-project/pull/72724

but for convolution.

|

|

The current lowering of tosa.fully_connected produces a linalg.matmul

followed by a linalg.generic to add the bias. The IR looks like the

following:

%init = tensor.empty()

%zero = linalg.fill ins(0 : f32) outs(%init)

%prod = linalg.matmul ins(%A, %B) outs(%zero)

// Add the bias

%initB = tensor.empty()

%result = linalg.generic ins(%prod, %bias) outs(%initB) {

// add bias and product

}

This has two down sides:

1. The tensor.empty operations typically result in additional

allocations after bufferization

2. There is a redundant traversal of the data to add the bias to the

matrix product.

This extra work can be avoided by leveraging the out-param of

linalg.matmul. The new IR sequence is:

%init = tensor.empty()

%broadcast = linalg.broadcast ins(%bias) outs(%init)

%prod = linalg.matmul ins(%A, %B) outs(%broadcast)

In my experiments, this eliminates one loop and one allocation (post

bufferization) from the generated code.

|

|

operators (#72715)

TOSA operators consumed by non-TOSA ops generally do not have their

types inferred, as that would alter the types expected by their

consumers. This prevents type refinement on many TOSA operators when the

IR contains a mix of dialects.

This change modifies tosa-infer-shapes to update the types of all TOSA

operators during inference. When a consumer of that TOSA op is not safe

to update, a tensor.cast is inserted back to the original type. This

behavior is similar to how TOSA ops consumed by func.return are handled.

This allows for more type refinement of TOSA ops, and the additional

tensor.cast operators may be removed by later canonicalizations.

|

|

This does the same as #72142 for vector.transfer_write. Previously the

pattern would silently drop the mask.

|

|

This folds transpose(shape_cast) into a new shape_cast, when the

transpose just permutes a unit dim from the result of the shape_cast.

Example:

```

%0 = vector.shape_cast %vec : vector<[4]xf32> to vector<[4]x1xf32>

%1 = vector.transpose %0, [1, 0] : vector<[4]x1xf32> to vector<1x[4]xf32>

```

Folds to:

```

%0 = vector.shape_cast %vec : vector<[4]xf32> to vector<1x[4]xf32>

```

This is an (alternate) fix for lowering matmuls to ArmSME.

|

|

This adds a `only-if-required-by-ops` flag to the `enable-arm-streaming`

pass. This flag defaults to `false` (which preserves the original

behaviour), however, if set to `true` the pass will only add the

selected ZA/streaming mode to functions that contain ops that implement

`ArmSMETileOpInterface`.

This simplifies enabling these modes, as we can now first try lowering

ops to ArmSME, then only if we succeed, add the relevant function

attributes.

|

|

This is a follow-up of #70040 in which the test updated here was missed.

Includes a few additional NFC changes in preparation for extending this

test.

|

|

This PR introduced `cp.async.bulk.commit.group` op.

|

|

When cloning an operation with a region, the builder was currently

notifying about the insertion of the cloned operations inside the region

before the cloned operation itself.

When using cloning inside rewrite pass, this could cause issues if a

pattern is expected to be applied on a cloned parent operation before

trying to apply patterns on the cloned operations it contains (the

patterns are attempted in order of notifications for the cloned

operations).

|

|

This patch refactors tests for:

vector.contract -> vector.outerproduct

for matvec operations (b += Ax). Summary of changes:

* add 2 missing cases (masked + scalable) when the operation kind is

`maxf`.

This is a part of a larger effort to add cases with scalable vectors to

tests for the Vector dialect.

Implements #72834.

|

|

from position array for slice-driven loop. (#73986)

|

|

type/attr classes (#72189)

This patch expose the type and attribute names in C++ as methods in the

`AbstractType` and `AbstractAttribute` classes, and keep a map of names

to `AbstractType` and `AbstractAttribute` in the `MLIRContext`. Type and

attribute names should be unique.

It adds support in ODS to generate the `getName` methods in

`AbstractType` and `AbstractAttribute`, through the use of two new

variables, `typeName` and `attrName`. It also adds names to C++-defined

type and attributes.

|

|

The idea is similar to vector.maskedload + vector.store emulation. What

the emulation does is:

1. Get a compressed mask and load the data from destination.

2. Bitcast the data to original vector type.

3. Select values between `op.valueToStore` and the data from load using

original mask.

4. Bitcast the new value and store it to destination using compressed

masked.

|

|

This patch adds a rewrite pattern for `vector.gather` over a strided

memref like the following:

```mlir

%subview = memref.subview %arg0[0, 0] [100, 1] [1, 1] :

memref<100x3xf32> to memref<100xf32, strided<[3]>>

%gather = vector.gather %subview[%c0] [%idxs], %cst_0, %cst :

memref<100xf32, strided<[3]>>, vector<4xindex>, vector<4xi1>, vector<4xf32>

into vector<4xf32>

```

After the pattern added in this patch:

```mlir

%collapse_shape = memref.collapse_shape %arg0 [[0, 1]] :

memref<100x3xf32> into memref<300xf32>

%1 = arith.muli %arg3, %cst : vector<4xindex>

%gather = vector.gather %collapse_shape[%c0] [%1], %cst_1, %cst_0 :

memref<300xf32>, vector<4xindex>, vector<4xi1>, vector<4xf32>

into vector<4xf32>

```

Fixes https://github.com/openxla/iree/issues/15364.

|

|

Fixes https://github.com/llvm/llvm-project/issues/64269.

With this patch, calling `mlir-opt "-convert-vector-to-scf=full-unroll

target-rank=0"` on

```mlir

func.func @main(%vec : vector<2xi32>) {

%alloc = memref.alloc() : memref<4xi32>

%c0 = arith.constant 0 : index

vector.transfer_write %vec, %alloc[%c0] : vector<2xi32>, memref<4xi32>

return

}

```

will result in

```mlir

module {

func.func @main(%arg0: vector<2xi32>) {

%c0 = arith.constant 0 : index

%c1 = arith.constant 1 : index

%alloc = memref.alloc() : memref<4xi32>

%0 = vector.extract %arg0[0] : i32 from vector<2xi32>

%1 = vector.broadcast %0 : i32 to vector<i32>

vector.transfer_write %1, %alloc[%c0] : vector<i32>, memref<4xi32>

%2 = vector.extract %arg0[1] : i32 from vector<2xi32>

%3 = vector.broadcast %2 : i32 to vector<i32>

vector.transfer_write %3, %alloc[%c1] : vector<i32>, memref<4xi32>

return

}

}

```

I've also tried to proactively find other `target-rank=0` bugs, but

couldn't find any. `options.targetRank` is only used 8 times throughout

the `mlir` folder, all inside `VectorToSCF.cpp`. None of the other uses

look like they could cause a crash. I've also tried

```mlir

func.func @main(%vec : vector<2xi32>) -> vector<2xi32> {

%alloc = memref.alloc() : memref<4xindex>

%c0 = arith.constant 0 : index

%out = vector.transfer_read %alloc[%c0], %c0 : memref<4xindex>, vector<2xi32>

return %out : vector<2xi32>

}

```

with `"--convert-vector-to-scf=full-unroll target-rank=0"` and that also

didn't crash. (Maybe obvious. I have to admit that I'm not very familiar

with these ops.)

|

|

This reworks the ArmSME dialect to use attributes for tile allocation.

This has a number of advantages and corrects some issues with the

previous approach:

* Tile allocation can now be done ASAP (i.e. immediately after

`-convert-vector-to-arm-sme`)

* SSA form for control flow is now supported (e.g.`scf.for` loops that

yield tiles)

* ArmSME ops can be converted to intrinsics very late (i.e. after

lowering to control flow)

* Tests are simplified by removing constants and casts

* Avoids correctness issues with representing LLVM `immargs` as MLIR

values

- The tile ID on the SME intrinsics is an `immarg` (so is required to be

a compile-time constant), `immargs` should be mapped to MLIR attributes

(this is already the case for intrinsics in the LLVM dialect)

- Using MLIR values for `immargs` can lead to invalid LLVM IR being

generated (and passes such as -cse making incorrect optimizations)

As part of this patch we bid farewell to the following operations:

```mlir

arm_sme.get_tile_id : i32

arm_sme.cast_tile_to_vector : i32 to vector<[4]x[4]xi32>

arm_sme.cast_vector_to_tile : vector<[4]x[4]xi32> to i32

```

These are now replaced with:

```mlir

// Allocates a new tile with (indeterminate) state:

arm_sme.get_tile : vector<[4]x[4]xi32>

// A placeholder operation for lowering ArmSME ops to intrinsics:

arm_sme.materialize_ssa_tile : vector<[4]x[4]xi32>

```

The new tile allocation works by operations implementing the

`ArmSMETileOpInterface`. This interface says that an operation needs to

be assigned a tile ID, and may conditionally allocate a new SME tile.

Operations allocate a new tile by implementing...

```c++

std::optional<arm_sme::ArmSMETileType> getAllocatedTileType()

```

...and returning what type of tile the op allocates (ZAB, ZAH, etc).

Operations that don't allocate a tile return `std::nullopt` (which is

the default behaviour).

Currently the following ops are defined as allocating:

```mlir

arm_sme.get_tile

arm_sme.zero

arm_sme.tile_load

arm_sme.outerproduct // (if no accumulator is specified)

```

Allocating operations become the roots for the tile allocation pass,

which currently just (naively) assigns all transitive uses of a root

operation the same tile ID. However, this is enough to handle current

use cases.

Once tile IDs have been allocated subsequent rewrites can forward the

tile IDs to any newly created operations.

|

|

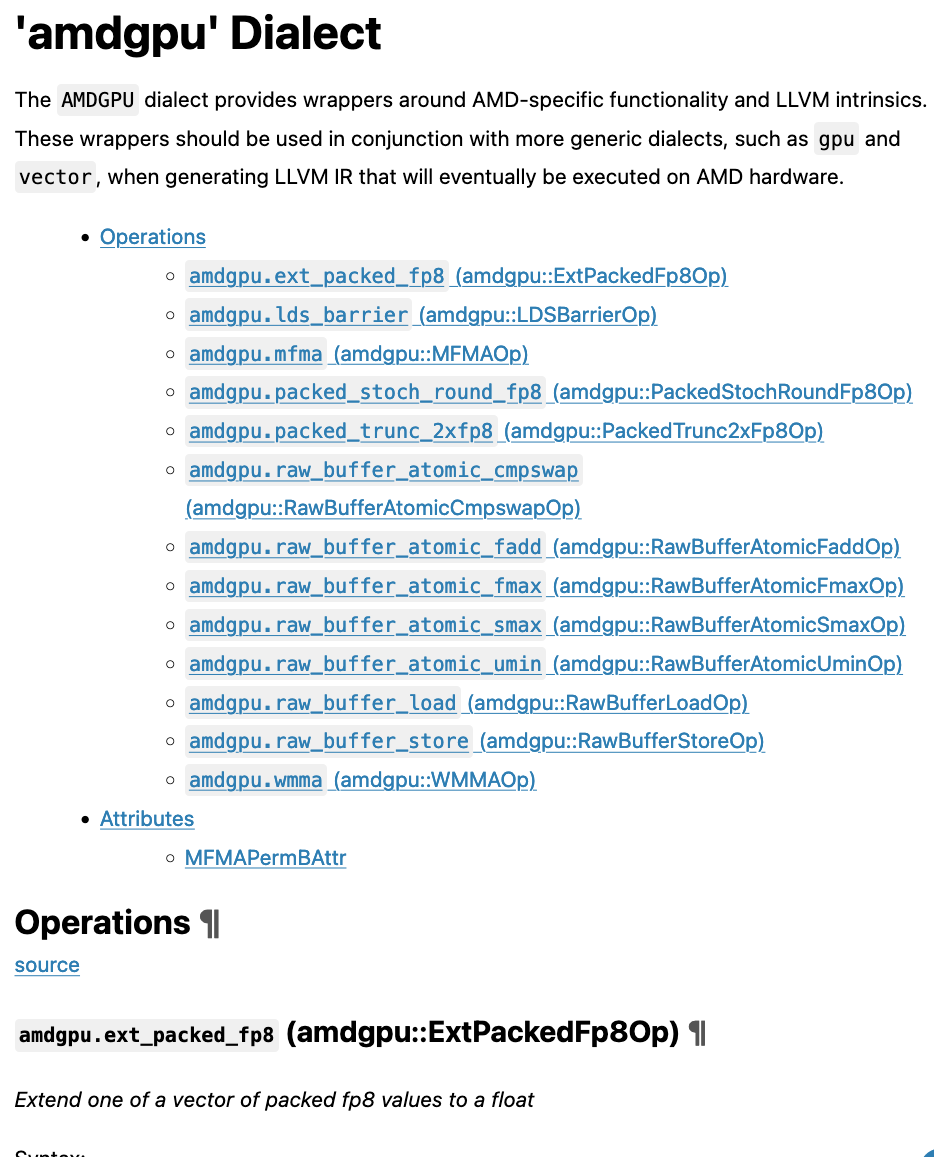

This patch suggests to change two things. Firstly, it adds a source link

above the generated operations docs (above the `emitOpDoc` calls). This

link will point directly to the source TableGen file for the group of

operations. For example, for the current

[`amdgpu`](https://mlir.llvm.org/docs/Dialects/AMDGPU/) page, the link

will add a source link below the "Operation definition" heading pointing

to

[`mlir/include/mlir/Dialect/AMDGPU/IR/AMDGPU.td`](https://github.com/llvm/llvm-project/blob/main/mlir/include/mlir/Dialect/AMDGPU/IR/AMDGPU.td).

The link is wrapped in a "op-definitions-source-link" class which could

allow for custom styling, but it also looks reasonable without custom

styling I think:

Secondly, this patch simplifies the header names such as "Operation

definition" and "Attribute definition" to "Operations" and "Attributes"

respectively. This is in line with manually defined subheadings on pages

such as the one for the

[`vector`](https://mlir.llvm.org/docs/Dialects/Vector/#operations)

dialect.

|

|

Out of curiosity, I ran [typos](https://github.com/crate-ci/typos)

against MLIR. It found two `CHECK:` typos (and many minor typos; which

I'm not gonna work on today).

|

|

Apart from the test itself, this patch also updates a few patterns to

fix how new VectorType(s) are created. Namely, it makes sure that

"scalability" is correctly propagated.

Regression tests will be updated seperately while auditing Vector

dialect tests in the context of scalable vectors:

* https://github.com/orgs/llvm/projects/23

|

|

Add missing constant propogation folder for IAddCarry and

[S|U]MulExtended. Due to currently missing constant value for

spirv.struct the folding is done using canonicalization patterns.

Implement additional folding when rhs is 0 for all ops and when rhs is 1

for UMulExt.

This helps for readability of lowered code into SPIR-V.

Part of work for #70704

|

|

(#73751)

Co-authored-by: Edgar <git@edgarluque.com>

|

|

Add missing constant propogation folder for [S|U]Mod, [S|U]Div, SRem

Implement additional folding when rhs is 1 for all ops.

This helps for readability of lowered code into SPIR-V.

Part of work for #70704

|

|

This PR introduce `setmaxregister.sync.aligned` Op to increase or

decrease the register size.

https://docs.nvidia.com/cuda/parallel-thread-execution/index.html#miscellaneous-instructions-setmaxnreg

|